To receive news from us, please register for the mailing list using this link or the QR code below. You will receive information about our upcoming seminars and the link to participate. Our seminars are on Mondays, 16.30-17.30 CET, and we typically send the link on Monday morning.

EURO – The Association of European Operational Research Societies has a new instrument: the EURO Online Seminar Series (EURO OSS). This is the page of the EURO OSS on Operational Research and Machine Learning, with website https://euroorml.euro-online.org/ and a link to register for the mailing list at https://forms.gle/YWLb6EPKRQnQert68.

The EURO OSS on Operational Research and Machine Learning is an online seminar series that aims to promote the role of Operational Research in Artificial Intelligence. The format is a weekly session that takes place every Monday, 16.30-17.30 (CET). It is held entirely online and features leading speakers from Operational Research, as well as neighbouring areas, who cover important topics such as explainability, fairness, fraud, and privacy. We also have the YOUNG EURO OSS on Operational Research and Machine Learning. In each YOUNG session, three junior academics present their latest results in this burgeoning area. All talks are recorded, and the videos are uploaded to the website.

There are also joint sessions with a so-called “Meet the …” talk plus a lecture. The “Meet the …” talks present either a EURO instrument or another institution relevant to the theme of the EURO OSS. We have approached EURO itself, and the president (in October), Prof Anita Schöbel, said a few words about EURO and its instruments at the opening of the OSS. We have also organized “Meet the …” talks with the EURO WISDOM Forum, the EURO Working Group on Continuous Optimization, the EURO Working Group Data Science Meets Optimization, the EURO Journal on Computational Optimization, the European Journal of Operational Research, and the INFORMS Journal on Data Science.

The EURO OSS on Operational Research and Machine Learning is organized by Emilio Carrizosa (IMUS – Instituto de Matemáticas de la Universidad de Sevilla) and Dolores Romero Morales (CBS – Copenhagen Business School) with the collaboration of PhD students Nuria Gómez-Vargas (IMUS), Thomas Halskov (CBS), Ansgar Boss Henrichsen (CBS) and Antonio Navas Orozco (IMUS). The Online Seminar Series is free thanks to the support given by EURO, as well as Universidad de Sevilla (US) and Copenhagen Business School (CBS). This is gratefully acknowledged.

See below the list of speakers in 2024/25 (in alphabetical order):

- Paula Alexandra Amaral, University “Nova de Lisboa”, Portugal

- Bart Baesens, KU Leuven, Belgium

- Marleen Balvert, Tilburg University, The Netherlands

- Immanuel Bomze, University of Vienna, Austria

- Coralia Cartis, University of Oxford, UK

- Bissan Ghaddar, Technical University of Denmark, Denmark

- Manuel Gómez Rodríguez, Max Planck Institute for Software Systems, Germany

- Vanesa Guerrero, Universidad Carlos III de Madrid, Spain

- Georgina Hall, INSEAD, France

- Andrea Lodi, Cornell University, USA

- Francesca Maggioni, University of Bergamo, Italy

- Ruth Misener, Imperial College London, UK

- Luis Nunes Vicente, Lehigh University, USA

- Laura Palagi, Sapienza University of Rome, Italy

- Veronica Piccialli, Sapienza University of Rome, Italy

- David Pisinger, Technical University of Denmark, Denmark

- Rubén Ruiz, Amazon Web Services, Spain

- Bartolomeo Stellato, Princeton University, USA

- Wolfram Wiesemann, Imperial College London, UK

- Roland Wunderling, Gurobi Optimization, Austria

- Yingqian Zhang, Eindhoven University of Technology, The Netherlands

YOUNG EURO OSS on Operational Research and Machine Learning speakers

- Lorenzo Bonasera, University of Pavia, Italy

- Antonio Consolo, Politecnico di Milano, Italy

- Anna Deza, University of California, USA

- Federico D’Onofrio, IASI-CNR, Italy

- Margot Geerts, KU Leuven, Belgium

- Sofie Goethals, Columbia University, USA

- Thomas Halskov, Copenhagen Business School, Denmark

- Esther Julien, TU Delft, The Netherlands

- Marica Magagnini, Università di Camerino, Italy

- Aras Selvi, Imperial College London, UK

- Rick Willemsen, Erasmus University Rotterdam, The Netherlands

- Kimberly Yu, Université de Montréal, Canada

The EURO OSS on Operational Research and Machine Learning is the sequel to a weekly online event that we ran from January 2021 to May 2024, https://congreso.us.es/mlneedsmo/. We had more than 100 speakers, mainly from Europe and North America. More than 2,000 colleagues registered to receive updates. Some of our speakers have drawn more than 200 attendees, and quite a few colleagues watch the videos on our YouTube channel instead. This channel has more than 1,000 subscribers, and some of the talks in our Online Seminar Series have more than 1,000 views so far.

March 2, 2026, 16.30 – 17.45 (CET)

VIII YOUNG Session

Explaining Deviations from Prior Knowledge in Cluster Analysis

Speaker 1: Farnaz Farzadnia

Postdoctoral researcher in the Department of Economics at Copenhagen Business School, Denmark

Abstract:

Cluster analysis is a fundamental tool in data-driven decision-making, yet a persistent challenge lies in updating clusters while maintaining consistency with initial labels as prior knowledge and ensuring that any reassignment is explained. The central motivation for this article comes from a real-world urban planning application, where accurately measuring the safety and comfort of cyclists is essential.

We address this novel clustering problem by proposing a multi-objective mathematical optimization model that simultaneously optimizes three objectives: minimizing intra-cluster dissimilarity to preserve adaptability, minimizing deviations from initial labels to maintain consistency with prior knowledge, and explaining cluster changes at both the item and cluster levels.

We validate the proposed approach in our urban planning application, where we used data from Copenhagen, Denmark, to measure the safety of bicycle lanes. We perform experiments on benchmark datasets as well. Our numerical results illustrate how we can produce adaptable clustering results that are consistent with initial labels and accompanied by clear, rule-based explanations.

Interpretable Surrogates for Optimization

Speaker 2: Sebastian Merten

Research assistant and PhD candidate at the University of Passau, Germany

Abstract:

An important factor in the practical implementation of optimization models is their acceptance by the intended users. This is influenced by various factors, including the interpretability of the solution process. A recently introduced framework for inherently interpretable optimization models proposes surrogates (e.g. decision trees) of the optimization process. These surrogates represent inherently interpretable rules for mapping problem instances to solutions of the underlying optimization model. In contrast to the use of conventional black-box solution methods, the application of these surrogates thus offers an interpretable solution approach. Building on this work, we investigate how we can generalize this idea to further increase interpretability while concurrently giving more freedom to the decision maker. We introduce surrogates which do not map to a concrete solution, but to a solution set instead, which is characterized by certain features. Furthermore, we address the question of how to generate surrogates that are better protected against perturbations. We use the concept of robust optimization to generate decision trees that perform well even in the worst case. For both approaches, exact methods as well as heuristics are presented and experimental results are shown. In particular, the relationship between interpretability and performance is discussed.

Dynamic Temperature Control of Simulated Annealing

Speaker 3: Francesca Da Ros

Polytechnic Department of Engineering and Architecture, University of Udine, Italy

Abstract:

This talk investigates whether the temperature control of Simulated Annealing (SA) can be adapted online in a problem-agnostic way, avoiding reliance on instance-dependent information or costly parameter tuning. The overarching aim is to reduce tuning overhead while maintaining an effective balance between exploration and exploitation throughout the search. To this end, we introduce HHSA, a framework based on hyper-heuristics, where fixed-temperature SA variants are used as low-level components. The approach is assessed using three established hyper-heuristic strategies across four combinatorial optimization domains: k-Graph Coloring, Permutation Flowshop, the Traveling Salesperson Problem, and Facility Location. Experimental results show that HHSA matches or outperforms a carefully tuned SA baseline in three of the four domains considered. Overall, the study provides further evidence that hyper-heuristics can offer robust cross-domain performance, reducing the need for problem-specific parameterization.
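The idea of treating fixed-temperature SA variants as low-level components can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the toy cost function, the temperature values, and in particular the epsilon-greedy selection rule, which is a simple placeholder and not one of the hyper-heuristic strategies assessed in the talk.

```python
import math
import random


def sa_step(x, cost, temperature, rng):
    """One Metropolis step at a fixed temperature on the integer line."""
    candidate = x + rng.choice((-1, 1))
    delta = cost(candidate) - cost(x)
    # Always accept improvements; accept worsening moves with prob. exp(-delta / T).
    if delta <= 0 or rng.random() < math.exp(-delta / temperature):
        return candidate
    return x


def hyper_heuristic_sa(cost, start, temperatures, steps, seed=0, epsilon=0.2):
    """Pick a fixed-temperature SA variant at every step (hypothetical epsilon-greedy rule)."""
    rng = random.Random(seed)
    rewards = [0.0] * len(temperatures)  # running score per low-level component
    x, best = start, start
    for _ in range(steps):
        if rng.random() < epsilon:
            i = rng.randrange(len(temperatures))  # explore a random component
        else:
            i = max(range(len(temperatures)), key=rewards.__getitem__)  # exploit
        new_x = sa_step(x, cost, temperatures[i], rng)
        # Reward a component by the cost reduction it just produced.
        rewards[i] = 0.9 * rewards[i] + 0.1 * (cost(x) - cost(new_x))
        x = new_x
        if cost(x) < cost(best):
            best = x
    return best


def toy_cost(x):
    """Hypothetical rugged objective, used only to exercise the sketch."""
    return (x - 7) ** 2 + 3 * (x % 3)


best = hyper_heuristic_sa(toy_cost, start=40, temperatures=[0.1, 1.0, 10.0], steps=2000)
print(best, toy_cost(best))
```

The selection rule never needs instance-specific information: it only observes the cost changes produced by each component, which is the property the abstract refers to as problem-agnostic.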

March 9, 2026, 16.30 – 17.30 (CET)

eXplainable Artificial Intelligence (XAI): a brief review and some contributions

Speaker: Prof Javier M. Moguerza

Research Centre for Intelligent Information Technologies (CETINIA-DSLAB)

Rey Juan Carlos University, Spain

Abstract:

This talk briefly presents the fundamentals of eXplainable Artificial Intelligence (XAI). First, the different taxonomies of explainable machine learning techniques are described, and then the presentation focuses on those based on individuals, also known as counterfactual observations. The classic concept of counterfactual is defined, some examples are presented, and the notion of a counterfactual set is introduced. Finally, some contributions based on the use of these counterfactual sets and numerical results supporting their effectiveness are described.

March 16, 2026, 16.30 – 17.30 (CET)

Generative Models for Inference and Decision: From Wasserstein Flows to Guided Generation

Speaker: Prof Yao Xie

Coca-Cola Foundation Chair & Professor

H. Milton Stewart School of Industrial and Systems Engineering

Georgia Institute of Technology, USA

Associate Director, Machine Learning Center

Abstract:

Generative models such as normalizing flows and diffusion processes have reshaped how we represent and reason about high-dimensional data, yet their mathematical foundations remain less understood. In this talk, I will present a unified perspective that views generative modeling as flows in probability space and highlights their connections to classical ideas in statistical inference and optimization.

I will begin with the JKO-flow generative model, inspired by the Jordan–Kinderlehrer–Otto scheme for Wasserstein gradient flows, which interprets density learning as proximal gradient descent in the space of probability measures and provides convergence guarantees. I will then discuss guided generative flows, which incorporate data- or objective-driven guidance fields for domain adaptation and inference under uncertainty.

I will also present recent results on worst-case generation formulated as minimax optimization in Wasserstein space, connecting distributionally robust optimization with flow-based modeling. Together, these developments form a principled framework for generative modeling that bridges statistics, machine learning, and operations research.

March 23, 2026, 16.30 – 17.30 (CET)

OR with a White Hat: Evidencing Privacy Vulnerabilities in ML Models

Speaker: Prof Thibaut Vidal

SCALE-AI Chair in Data-Driven Supply Chains

Professor at the Department of Mathematics and Industrial Engineering (MAGI) of Polytechnique Montréal, Canada

Abstract:

The deployment of machine learning models in high-stakes domains (e.g., finance, medicine) raises profound questions about the privacy of the data used to train them. In this talk, I will present a broader perspective on how operations research can provide a rigorous methodological backbone for analyzing, quantifying, and ultimately mitigating privacy risks in modern ML pipelines. I will first discuss a white-box reconstruction attack that formulates the recovery of a random forest's training data as a maximum-likelihood combinatorial problem solved with constraint programming. Remarkably, this approach can often reconstruct entire datasets, even from forests with only a few trees. Next, we turn to black-box access and explainability-driven interfaces. Counterfactual explanations (now increasingly needed and exposed through ML APIs) represent a powerful attack surface. Using tools from online optimization and competitive analysis, we derive tight bounds on the number of counterfactual queries required to exactly extract tree-based models and introduce new algorithms achieving provably perfect fidelity. Finally, I will examine the protection offered by differential privacy. Focusing on ε-DP random forests, we demonstrate that even models satisfying strict DP guarantees can still leak meaningful, dataset-specific information in practice, unless the privacy noise is increased to the point where the model loses most of its predictive value. Together, the talk highlights critical tensions between predictive performance, explainability, and privacy protection, and showcases OR-based techniques as powerful instruments for navigating these trade-offs.

April 13, 2026, 16.30 – 17.30 (CET)

Optimization Over Trained Neural Networks: What, Why, and How?

Speaker: Prof Thiago Serra Azevedo Silva

Assistant Professor of Business Analytics

Tippie College of Business

University of Iowa, USA

Abstract:

Constraint learning is a new paradigm of modeling optimization problems with the help of machine learning. For example, we can learn constraints empirically from available data, or we can avoid writing a nonlinear objective function by learning a simpler approximation for it. We usually achieve that by using a neural network as part of the optimization model. As a consequence, we need to design optimization algorithms that take into account the structure of neural networks. We show how polyhedral theory and network pruning can help us solve those problems more efficiently, but that’s just the beginning!
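As an illustration of what embedding a neural network in an optimization model can look like, one standard encoding of a single ReLU neuron as mixed-integer linear constraints is sketched here. It is a textbook big-M formulation, not necessarily the one used in the talk; the symbols $w$, $b$, $L$, $U$, and $z$ are introduced only for this sketch, and the pre-activation $a = w^\top x + b$ is assumed to satisfy known bounds $L \le a \le U$ with $L < 0 < U$.

$$
\begin{aligned}
y &\ge w^\top x + b, \\
y &\ge 0, \\
y &\le w^\top x + b - L\,(1 - z), \\
y &\le U z, \\
z &\in \{0, 1\}.
\end{aligned}
$$

With $z = 1$ the constraints force $y = w^\top x + b \ge 0$, and with $z = 0$ they force $y = 0$ and $w^\top x + b \le 0$, so that $y = \max(0, w^\top x + b)$ in both cases. Tighter bounds $L$ and $U$ give a stronger linear relaxation.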

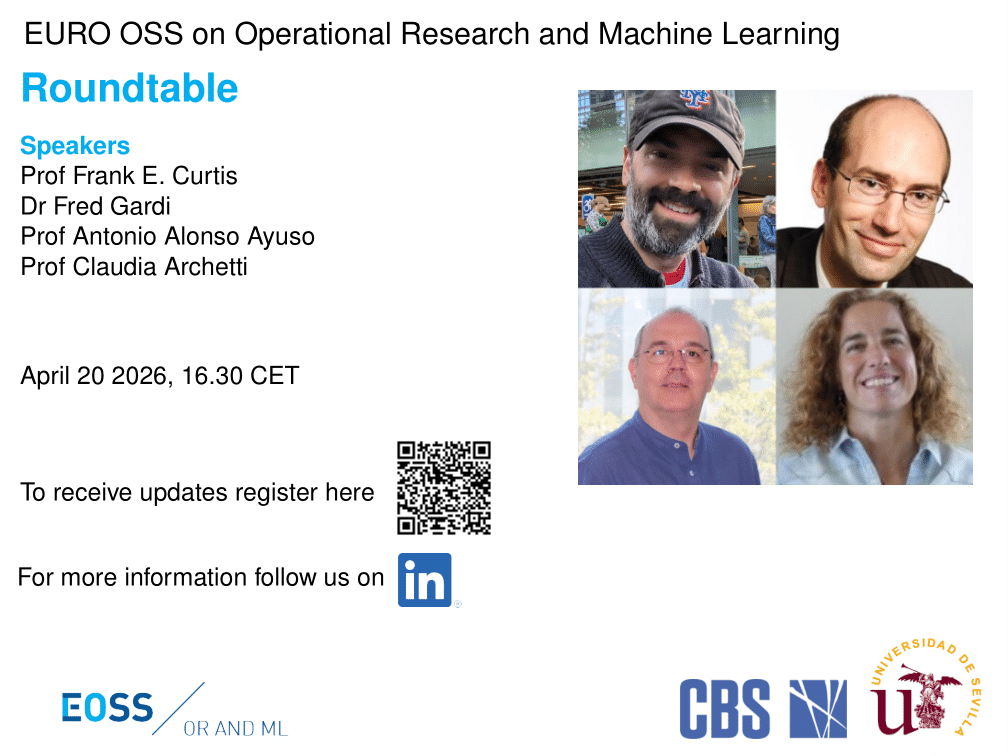

April 20, 2026, 16.30 – 17.30 (CET)

Beyond MILP: A Hybrid Approach to Large-Scale Real-World Optimization with Hexaly

Speaker: Dr Fred Gardi

Founder & CEO of Hexaly, France

Abstract:

Mixed-Integer Linear Programming (MILP) solvers have long dominated Operations Research (OR), yet they frequently struggle with large-scale, highly discrete, non-convex, or mixed-variable problems due to limitations in modeling expressiveness and scalability.

Hexaly embodies a post-MILP paradigm through a hybrid global solver that combines heuristic and exact methods, integrating concepts from Mixed-Integer Programming (MIP), Non-Linear Programming (NLP), Constraint Programming (CP), and Black-Box Optimization (BBO). By eliminating the need for linearization and departing from traditional tree-search paradigms, Hexaly achieves superior speed and scalability on real-world instances, routinely delivering high-quality solutions in minutes where conventional MILP solvers fail to produce feasible results.

This talk introduces the core principles of this hybrid post-MILP approach and discusses its implications for bridging OR with modern data-driven decision-making, particularly in enabling stochastic, robust, and bilevel optimization modeling, as well as coupling simulation and machine learning with mathematical optimization.

April 27, 2026, 16.30 – 17.30 (CET)

Machine Learning for Faster Matheuristics: Perspectives and Advances

Speaker: Prof Emma Frejinger

Université de Montréal, Canada

Abstract:

There is a rapidly growing literature on using machine learning to either predict solutions to mathematical programs or accelerate general-purpose solvers. In this talk, we present our recent work in this area and introduce instance generators designed for benchmarking. We also situate our results within the broader literature on integrating machine learning with optimization, highlighting key findings and outlining promising directions for future research.

October 14, 2024, 16.30 – 17.30 (CET)

Using AI for Fraud Detection: Recent Research Insights and Emerging Opportunities

Abstract:

Typically, organizations lose around five percent of their revenue to fraud. In this presentation, we explore advanced AI techniques to address this issue. Drawing on our recent research, we begin by examining cost-sensitive fraud detection methods, such as CS-Logit, which integrates the economic imbalances inherent in fraud detection into the optimization of AI models. We then move on to data engineering strategies that enhance the predictive capabilities of both the data and AI models through intelligent instance and feature engineering. We also delve into network data, showcasing our innovative research methods like Gotcha and CATCHM for effective data featurization. A significant focus is placed on Explainable AI (XAI), which demystifies high-performance AI models used in fraud detection, aiding in the development of effective fraud prevention strategies. We provide practical examples from various sectors including credit card fraud, anti-money laundering, insurance fraud, tax evasion, and payment transaction fraud. Furthermore, we discuss the overarching issue of model risk, which encompasses everything from data input to AI model deployment. Throughout the presentation, the speaker will thoroughly discuss his recent research, conducted in partnership with leading global financial institutions such as BNP Paribas Fortis, Allianz, ING, and Ageas.

October 28, 2024, 16.30 – 17.30 (CET)

Verifying message-passing neural networks via topology-based bounds tightening

Speaker: Prof Ruth Misener

BASF / Royal Academy of Engineering Research Chair in Data Driven Optimisation

Department of Computing

Imperial College London, UK

Abstract:

Since graph neural networks (GNNs) are often vulnerable to attack, we need to know when we can trust them. We develop a computationally effective approach towards providing robust certificates for message-passing neural networks (MPNNs) using a Rectified Linear Unit (ReLU) activation function. Because our work builds on mixed-integer optimization, it encodes a wide variety of subproblems; for example, it admits (i) both adding and removing edges, (ii) both global and local budgets, and (iii) both topological perturbations and feature modifications. Our key technology, topology-based bounds tightening, uses graph structure to tighten bounds. We also experiment with aggressive bounds tightening to dynamically change the optimization constraints by tightening variable bounds. To demonstrate the effectiveness of these strategies, we implement an extension to the open-source branch-and-cut solver SCIP. We test on both node and graph classification problems and consider topological attacks that both add and remove edges.

This work is joint with Christopher Hojny, Shiqiang Zhang, and Juan Campos.

November 4, 2024, 16.30 – 17.30 (CET)

I YOUNG Session

K-anonymous counterfactual explanations

Speaker 1: Sofie Goethals

Postdoctoral researcher at Columbia Business School, USA

University of Antwerp, Belgium

Abstract:

Black-box machine learning models are used in an increasing number of high-stakes domains, and this creates a growing need for Explainable AI (XAI). However, the use of XAI in machine learning introduces privacy risks, which currently remain largely unnoticed. Therefore, we explore the possibility of an explanation linkage attack, which can occur when deploying instance-based strategies to find counterfactual explanations. To counter such an attack, we propose k-anonymous counterfactual explanations and introduce pureness as a metric to evaluate the validity of these k-anonymous counterfactual explanations. Our results show that making the explanations, rather than the whole dataset, k-anonymous, is beneficial for the explanation quality.

Neur2BiLO: Neural Bilevel Optimization

Speaker 2: Esther Julien

PhD Student at TU Delft, The Netherlands

Abstract:

Bilevel optimization deals with nested problems in which a leader takes the first decision to minimize their objective function while accounting for a follower’s best-response reaction. Constrained bilevel problems with integer variables are particularly notorious for their hardness. While exact solvers have been proposed for mixed-integer linear bilevel optimization, they tend to scale poorly with problem size and are hard to generalize to the non-linear case. On the other hand, problem-specific algorithms (exact and heuristic) are limited in scope. Under a data-driven setting in which similar instances of a bilevel problem are solved routinely, our proposed framework, Neur2BiLO, embeds a neural network approximation of the leader’s or follower’s value function, trained via supervised regression, into an easy-to-solve mixed-integer program. Neur2BiLO serves as a heuristic that produces high-quality solutions extremely fast for linear/non-linear, integer/mixed-integer bilevel problems.

On constrained mixed-integer DR-submodular minimization

Speaker 3: Qimeng (Kim) Yu

Department of Computer Science and Operations Research (DIRO), Université de Montréal, Canada

Abstract:

Submodular set functions play an important role in integer programming and combinatorial optimization. Increasingly, applications call for generalized submodular functions defined over general integer or continuous domains. Diminishing returns (DR)-submodular functions are one such generalization, encompassing a broad class of functions that are generally nonconvex and nonconcave. We study the problem of minimizing any DR-submodular function with continuous and general integer variables under box constraints and, possibly, additional monotonicity constraints. We propose valid linear inequalities for the epigraph of any DR-submodular function under the constraints. We further provide the complete convex hull of such an epigraph, which, surprisingly, turns out to be polyhedral. We propose a polynomial-time exact separation algorithm for our proposed valid inequalities with which we first establish the polynomial-time solvability of this class of mixed-integer nonlinear optimization problems.
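For readers unfamiliar with the terminology, the commonly used definition of DR-submodularity and of the epigraph can be written as follows. The notation ($\mathcal{X}$, $e_i$, $k$, $w$) is chosen here for illustration and may differ from the speaker's. A function $f : \mathcal{X} \to \mathbb{R}$ on a box $\mathcal{X}$ with integer and/or continuous coordinates is DR-submodular if

$$
f(x + k e_i) - f(x) \;\ge\; f(y + k e_i) - f(y)
$$

for all $x \le y$ (componentwise), every coordinate $i$ with unit vector $e_i$, and every $k \ge 0$ such that $x + k e_i$ and $y + k e_i$ remain in $\mathcal{X}$. Minimizing $f$ is then equivalent to minimizing $w$ over the epigraph

$$
\operatorname{epi} f = \{ (x, w) \in \mathcal{X} \times \mathbb{R} : w \ge f(x) \},
$$

which is why valid inequalities and a convex hull description for this set translate directly into a solution method.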

November 11, 2024, 16.30 – 17.30 (CET)

Need to relax - but perhaps later? Reflections on modeling sparsity and mixed-binary nonconvex problems

Abstract:

In some ML communities, researchers claim that obtaining local solutions of optimality criteria is often sufficient to provide a meaningful and accurate data model in real-world analytics. However, this is simply incorrect and sometimes dangerously misleading, particularly when it comes to highly structured problems involving non-convexity such as discrete decisions (binary variables). This talk will advocate the necessity of research efforts in the quest for global solutions and strong rigorous bounds for quality guarantees, showcased on one of today's most popular domains: cardinality-constrained models. These models try to achieve fairness, transparency and explainability in AI applications, ranging from mathematical finance and economics to social and life sciences.

From a computational viewpoint, it may be tempting to replace the zero-norm (number of nonzero variables) with surrogates, for the benefit of tractability. We argue that these relaxations come too early. Instead, we propose to incorporate the true zero-norm into the base model and treat this either by MILP relaxations or else by lifting to tractable conic optimization models. Both in practice and in theory, these have proved to achieve much stronger bounds than the usual LP-based ones, and therefore they may, more reliably and based upon exact arguments, assess the quality of proposals coming from other techniques in a more precise way. With some effort invested in the theory (aka later relaxations), the resulting models are still scalable and would guarantee computational performance closer to reality and/or optimality.

November 18, 2024, 16.30 – 17.30 (CET)

Large scale Minimum Sum of Squares Clustering with optimality guarantees

Abstract:

Minimum Sum of Squares Clustering (MSSC) is one of the most important problems in unsupervised learning and aims to partition n observations into k clusters to minimize the sum of squared distances from the points to the centroid of their cluster. The MSSC commonly arises in a wide range of disciplines and applications, and since it is an unsupervised task it is vital to certify the quality of the produced solution. Recently, exact approaches able to solve small-medium size instances have been proposed, but when the dimension of the instance grows, they become impractical. In this work, we exploit the ability to solve small instances to certify the quality of heuristic solutions for large-scale datasets. The fundamental observation is that the minimum MSSC of the union of disjoint subsets is greater than or equal to the sum of the minimum MSSC values of the individual subsets. This implies that summing up the optimal value of the MSSC on disjoint subsets of a dataset provides a lower bound on the optimal within-cluster sum of squares (WSS) for the entire dataset. The quality of the lower bound is strongly dependent on how the dataset is partitioned into disjoint subsets. To improve this lower bound, we aim to maximize the minimum WSS of each subset by creating subsets of points with high dissimilarity. This approach, known as anticlustering or maximally diverse grouping problem in the literature, seeks to form highly heterogeneous partitions of a given dataset. By approximately solving an anticlustering problem, we develop a certification process to validate clustering solutions obtained using a heuristic algorithm. We test our method on large-scale instances with datasets ranging from 2,000 to 10,000 data points and containing 2 to 500 features. Our procedure consistently achieved a gap between the clustering solution and the lower bound ranging from 1% to 5%.
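The lower-bound observation in this abstract can be checked on a tiny instance. The sketch below is only an illustration under stated assumptions: it solves MSSC exactly by brute-force enumeration (feasible only for a handful of points, and not the exact method used by the authors), the data are made up, and the split into two subsets is a hand-picked stand-in for an anticlustering step.

```python
from itertools import product


def wss(points, labels, k):
    """Within-cluster sum of squared distances to each cluster centroid."""
    total = 0.0
    for c in range(k):
        members = [p for p, lab in zip(points, labels) if lab == c]
        if not members:
            continue  # empty clusters contribute nothing
        dim = len(members[0])
        centroid = [sum(p[d] for p in members) / len(members) for d in range(dim)]
        total += sum((p[d] - centroid[d]) ** 2 for p in members for d in range(dim))
    return total


def optimal_mssc(points, k):
    """Exact MSSC value by enumerating all assignments (tiny instances only)."""
    return min(wss(points, labels, k) for labels in product(range(k), repeat=len(points)))


# Hypothetical 2-D data with two well-separated groups.
data = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0),
        (5.2, 4.9), (4.8, 5.1), (0.3, 0.2), (5.1, 5.3)]
k = 2

# Disjoint subsets that each mix points from both groups (anticlustering-like split).
subset_a = data[0::2]
subset_b = data[1::2]

lower_bound = optimal_mssc(subset_a, k) + optimal_mssc(subset_b, k)
full_optimum = optimal_mssc(data, k)
print(f"lower bound = {lower_bound:.4f}, optimum = {full_optimum:.4f}")
```

The inequality holds because restricting an optimal clustering of the full dataset to a subset gives a feasible clustering of that subset, and recomputing the centroids on the subset can only reduce the cost.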

November 25, 2024, 16.30 – 17.30 (CET)

Learning to Branch and to Cut: Enhancing Non-Linear Optimization with Machine Learning

Speaker: Prof Bissan Ghaddar

Associate Professor, Management Science & Sustainability

John M. Thompson Chair in Engineering Leadership & Innovation

Ivey Business School, Canada

Abstract:

The use of machine learning techniques to improve the performance of branch-and-bound optimization algorithms is a very active area in the context of mixed integer linear problems, but little has been done for non-linear optimization. To bridge this gap, we develop a learning framework for spatial branching and show its efficacy in the context of the Reformulation-Linearization Technique for polynomial optimization problems. The proposed learning is performed offline, based on instance-specific features and with no computational overhead when solving new instances. Novel graph-based features are introduced, which turn out to play an important role in the learning. Experiments on different benchmark instances from MINLPLib and QPLIB show that the learning-based branching selection and learning-based constraint generation significantly outperform the standard approaches.

December 2, 2024, 16.30 – 17.30 (CET)

Pragmatic OR: Solving large scale optimization problems in fast moving environments

Speaker: Prof Rubén Ruiz

Principal Applied Scientist at Amazon Web Services (AWS)

Professor of Statistics and Operations Research, Universitat Politècnica de València (on leave), Spain

Abstract:

This talk examines the gap between academic Operations Research and real-world industrial applications, particularly in environments like Amazon and AWS where sheer scale and delivery speed are important factors to consider. While academic research often prioritizes complex algorithms and optimal solutions, large-scale industrial problems demand more pragmatic approaches. These real-world scenarios frequently involve multiple objectives, soft constraints, and rapidly evolving business requirements that require flexibility and quick adaptation.

We argue for the use of heuristic solvers and simplified modeling techniques that prioritize speed, adaptability, and ease of implementation over strict optimality or complex approaches. This angle is particularly valuable when dealing with estimated input data, where pursuing optimality may be less meaningful.

The presentation will showcase various examples, including classical routing and scheduling problems, as well as more complex scenarios like virtual machine placement in Amazon EC2. These cases illustrate how pragmatic methods can effectively address real-world challenges, offering robust and maintainable solutions that balance performance with operational efficiency. The goal is to demonstrate that in many industrial applications, a small optimality gap is an acceptable trade-off for significantly improved flexibility and reduced operational overhead.

December 9, 2024, 16.30 – 17.30 (CET)

From Data to Donations: Optimal Fundraising Campaigns for Non-Profit Organizations

Speaker: Prof Wolfram Wiesemann

Professor of Analytics & Operations

Imperial College Business School

Imperial College

UK

Abstract:

Non-profit organizations play an essential role in addressing global challenges, yet their financial sustainability often depends on raising funds through resource-intensive campaigns. We partner with a major international non-profit to develop and test data-driven approaches to enhance the efficiency of their fundraising efforts. The organization conducts multiple annually recurring campaigns, with thematic links between them. These connections enable us to predict a donor’s propensity to contribute to one campaign based on their behavior in others. This structure is common among non-profits but not readily utilized by conventional multi-armed bandit algorithms. To leverage these inter-campaign patterns, we design two algorithms that integrate concepts from the multi-armed bandit and clustering literature. We analyze the theoretical properties of both algorithms, and we empirically validate their effectiveness on both synthetic and real data from our partner organization.

January 13, 2025, 16.30 – 17.30 (CET)

The differentiable Feasibility Pump

Speaker: Prof Andrea Lodi

Andrew H. and Ann R. Tisch Professor

Jacobs Technion-Cornell Institute

Cornell University

USA

Abstract:

Although nearly 20 years have passed since its conception, the feasibility pump algorithm remains a widely used heuristic to find feasible primal solutions to mixed-integer linear problems. Many extensions of the initial algorithm have been proposed. Yet, its core algorithm remains centered around two key steps: solving the linear relaxation of the original problem to obtain a solution that respects the constraints, and rounding it to obtain an integer solution. This paper shows that the traditional feasibility pump and many of its follow-ups can be seen as gradient-descent algorithms with specific parameters. A central aspect of this reinterpretation is observing that the traditional algorithm differentiates the solution of the linear relaxation with respect to its cost. This reinterpretation opens many opportunities for improving the performance of the original algorithm. We study how to modify the gradient-update step and how to extend its loss function. We perform extensive experiments on MIPLIB instances and show that these modifications can substantially reduce the number of iterations needed to find a solution. (Joint work with M. Cacciola, A. Forel, and A. Frangioni.)

January 20, 2025, 16.30 – 17.30 (CET)

Introducing the Cloud of Spheres Classification: An Alternative to Black-Box Models

Speaker: Prof Paula Alexandra Amaral

Associate Professor, Department of Mathematics & Nova Math

Nova School of Science & Technology

University “Nova de Lisboa”

Portugal

Abstract:

Machine learning models have been widely applied across various domains, often without critically examining the underlying mechanisms. Black-box models, such as Deep Neural Networks, pose significant challenges in terms of counterfactual analysis, interpretability, and explainability. In situations where understanding the rationale behind a model’s predictions is essential, exploring more transparent machine learning techniques becomes highly advantageous.

In this presentation, we introduce a novel binary classification model called a Cloud of Spheres. The model is formulated as a Mixed-Integer Nonlinear Programming (MINLP) problem that seeks to minimize the number of spheres required to classify data points. This approach is particularly well-suited for scenarios where the structure of the target class is highly non-linear and non-convex, but it can also adapt to cases with linear separability.

Unlike neural networks, this classification model retains data in its original feature space, eliminating the need for kernel functions or extensive hyperparameter tuning. Only one parameter may be required if the objective is to maximize the separation margin, similar to Support Vector Machines (SVMs). By avoiding black-box complexity, the model enhances transparency and interpretability.

A significant challenge with this method lies in finding the global optima for large datasets. To address this, we propose a heuristic approach that has demonstrated good performance on a benchmark set. When compared to state-of-the-art algorithms, the heuristic delivers competitive results, showcasing the model’s effectiveness and practical applicability.
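To make the idea of classifying with a set of spheres concrete, here is a minimal sketch of a prediction rule only. It assumes, as one plausible reading of the abstract, that a point is assigned to the target class when it falls inside at least one sphere; the function name `in_cloud` and the two spheres are hypothetical, and the MINLP that would actually choose centres and radii is not shown.

```python
import math

def in_cloud(point, spheres):
    """Return True if `point` lies inside at least one (center, radius) sphere.

    Assumed decision rule for illustration only: inside the union of
    spheres means "target class", outside means "other class".
    """
    return any(math.dist(point, center) <= radius for center, radius in spheres)

# Hypothetical cloud of two spheres in the original 2-D feature space.
spheres = [((0.0, 0.0), 1.0), ((3.0, 0.0), 0.5)]

print(in_cloud((0.5, 0.5), spheres))  # inside the first sphere
print(in_cloud((1.5, 0.0), spheres))  # in the gap between the two spheres
```

Because each sphere lives in the original feature space, the centre and radius that cover a given point can be read off directly, which is one way to see why such a model is easier to inspect than a black box.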

January 27, 2025, 16.30 – 17.30 (CET)

II YOUNG Session

A unified approach to extract interpretable rules from tree ensembles via Integer Programming

Speaker 1: Dr Lorenzo Bonasera

Research Scientist at Aerospace Center (DLR) – Institute for AI Safety and Security, Germany

Abstract:

Tree ensemble methods represent a popular machine learning model, known for their effectiveness in supervised classification and regression tasks. Their performance derives from aggregating predictions of multiple decision trees, which are renowned for their interpretability properties. However, tree ensemble methods do not reliably exhibit interpretable output. Our work aims to extract an optimized list of rules from a trained tree ensemble, providing the user with a condensed, interpretable model that retains most of the predictive power of the full model. Our approach consists of solving a partitioning problem formulated through Integer Programming. The proposed method works with either tabular or time series data, for both classification and regression tasks, and it can include any kind of nonlinear loss function. Through rigorous computational experiments, we offer statistically significant evidence that our method is competitive with other rule extraction methods, and it excels in terms of fidelity towards the opaque model.

A decomposition algorithm for sparse and fair soft regression trees

Speaker 2: Dr Antonio Consolo

Postdoctoral researcher at Politecnico di Milano, Department of Electronics, Information and Bioengineering, Italy

Abstract:

In supervised Machine Learning (ML) models, achieving sparsity in input features is crucial not only for feature selection but also for enhancing model interpretability and potentially improving testing accuracy. Another crucial aspect is accounting for fairness measures, which makes it possible to guarantee comparable performance for individuals belonging to sensitive groups. This talk focuses on sparsity and fairness in decision trees for regression tasks, a widely used interpretable ML model.

We recently proposed a new model variant for Soft Regression Trees (SRTs), which are decision trees with probabilistic decision rules at each branch node and a linear regression at each leaf node. Unlike in previous SRT approaches, in our variant each leaf node provides a prediction with its associated probability but, for any input vector, the output of the SRT is given by the regression associated with a single leaf node, namely, the one reached by following the branches with the highest probability. Our SRT variant exhibits the “conditional computation” property (a small portion of the tree architecture is activated when generating a prediction for a given data point), provides posterior probabilities, and is well-suited for decomposition. The convergent decomposition training algorithm turned out to yield accurate soft trees in short CPU time.

In this work, we extend the SRT model variant and the decomposition training algorithm to take into account model sparsity and fairness measures. Experiments on datasets from the UCI and KEEL repositories indicate that our approach effectively induces sparsity. Computational results on different datasets show that the decomposition algorithm, adapted to take into account fairness constraints, yields accurate SRTs which guarantee comparable performance between sensitive groups, without significantly affecting the computational load.

Evaluating Large Language Models for Real Estate Valuation: Insights into Performance and Interpretability

Speaker 3: Margot Geerts

PhD student at Research Centre for Information Systems Engineering (LIRIS), Faculty of Economics and Business, KU Leuven, Belgium

Abstract:

The real estate market is vital globally, yet stakeholders often struggle with information asymmetry in property valuation. This study explores how Large Language Models (LLMs) can improve transparency by accurately predicting house prices and providing insights into predictions. Evaluating GPT-4o-mini, Llama 3, and others across diverse datasets, we find that LLMs leverage hedonic variables effectively, achieving accuracy comparable to Gradient Boosted Trees and surpassing k-Nearest Neighbors. Tailored prompting strategies enhance their performance, with market-specific adjustments remaining crucial. While LLM explanations align with state-of-the-art models, their prediction intervals are often overconfident, revealing limitations in reliability and spatial reasoning. This research underscores LLMs’ potential to reduce knowledge gaps, offering accessible, data-driven insights for more transparent valuations.

February 3, 2025, 16.30 – 17.30 (CET)

Bridging smooth regression and mathematical optimization

Speaker: Prof Vanesa Guerrero

Associate Professor

Department of Statistics

Universidad Carlos III de Madrid

Spain

Abstract:

In today’s data-driven decision-making landscape, it is crucial to develop systems that integrate human knowledge and provide valuable support to the decision-maker. This talk explores the intersection of statistical modeling and mathematical optimization to address the estimation of smooth functions which satisfy structural properties (e.g. about sign, monotonicity or curvature). By leveraging these shape-constrained functions, we construct surrogate models for complex functions, enabling their use in mixed-integer nonlinear programming (MINLP). This approach exploits separability to enhance tractability, offering a practical framework for tackling challenging optimization problems in real-world applications.

February 10, 2025, 16.30 – 17.30 (CET)

Counterfactual Token Generation in Large Language Models

Abstract:

“Sure, I am happy to generate a story for you: Captain Lyra stood at the helm of her trusty ship, the Maelstrom’s Fury, gazing out at the endless sea. […] Lyra’s eyes welled up with tears as she realized the bitter truth – she had sacrificed everything for fleeting riches, and lost the love of her crew, her family, and herself.” Although this story, generated by a large language model, is captivating, one may wonder—how would the story have unfolded if the model had chosen “Captain Maeve” as the protagonist instead? We cannot know. State-of-the-art large language models are stateless—they maintain no internal memory or state. Given a prompt, they generate a sequence of tokens as an output using an autoregressive process. As a consequence, they cannot reason about counterfactual alternatives to tokens they have generated in the past. In this work, our goal is to enhance them with this functionality. To this end, we develop a causal model of token generation that builds upon the Gumbel-Max structural causal model. Our model allows any large language model to perform counterfactual token generation at almost no cost in comparison with vanilla token generation, it is embarrassingly simple to implement, and it does not require any fine-tuning nor prompt engineering. We implement our model on Llama 3 8B-Instruct and Ministral-8B-Instruct and conduct a qualitative and a quantitative analysis of counterfactually generated text. We conclude with a demonstrative application of counterfactual token generation for bias detection, unveiling interesting insights about the model of the world constructed by large language models.
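As a rough illustration of the Gumbel-Max idea mentioned in the abstract, the sketch below samples a token as the argmax of logits plus Gumbel noise, then reuses the same noise with different logits to obtain a counterfactual token. It is a toy in plain Python and not the speakers' implementation: the three-token vocabulary, the logits, and the helper names `gumbel_noise` and `gumbel_max_sample` are all made up, and it assumes the noise drawn during the factual generation has been stored.

```python
import math
import random

def gumbel_noise(rng, n):
    """Draw n independent standard Gumbel variates via inverse transform."""
    # max(...) guards against log(0) in the unlikely event rng.random() returns 0.
    return [-math.log(-math.log(max(rng.random(), 1e-12))) for _ in range(n)]

def gumbel_max_sample(logits, noise):
    """Return the index maximising logit + noise (the Gumbel-Max trick)."""
    scores = [l + g for l, g in zip(logits, noise)]
    return max(range(len(scores)), key=scores.__getitem__)

rng = random.Random(0)

# Factual step: hypothetical next-token logits over a 3-token vocabulary.
factual_logits = [2.0, 1.0, 0.5]
noise = gumbel_noise(rng, len(factual_logits))
factual = gumbel_max_sample(factual_logits, noise)

# Counterfactual step: the logits change (for example because an earlier
# token was altered), but the exogenous noise is held fixed.
counterfactual_logits = [0.5, 1.0, 2.0]
counterfactual = gumbel_max_sample(counterfactual_logits, noise)

print(factual, counterfactual)
```

Holding the noise fixed is what separates a counterfactual sample from simply resampling: if the logits are left unchanged, the counterfactual token coincides with the factual one.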

February 17, 2025, 16.30 – 17.30 (CET)

III YOUNG Session

Accelerating Benders decomposition for the p-median problem through variable aggregation

Speaker 1: Rick Willemsen

PhD candidate at Erasmus University Rotterdam, The Netherlands

Abstract:

The p-median problem is a classical location problem where the goal is to select p facilities while minimizing the sum of distances from each location to its nearest facility. Recent advancements in solving the p-median and related problems have successfully leveraged Benders decomposition methods. The current bottleneck is the large number of variables and Benders cuts that are needed. We consider variable aggregation to reduce the size of these models. We propose to partially aggregate the variables in the model based on a start solution; aggregation occurs only when the corresponding locations are assigned to the same facility in the initial solution. In addition, we propose a set of valid inequalities tailored to these aggregated variables. Our computational experiments indicate that our model, post-initialization, provides a stronger lower bound, thereby accelerating the resolution of the root node. Furthermore, this approach seems to positively impact the branching procedure, leading to an overall faster Benders decomposition method.
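For readers unfamiliar with the p-median objective, the following sketch evaluates it and solves a tiny instance by brute-force enumeration. It is only meant to make the problem statement concrete: the five locations on a line are invented, and enumeration is in no way a substitute for the Benders decomposition discussed in the talk.

```python
from itertools import combinations

def p_median_cost(dist, facilities):
    """Sum over all locations of the distance to the nearest open facility."""
    return sum(min(row[j] for j in facilities) for row in dist)

def brute_force_p_median(dist, p):
    """Enumerate every set of p facilities and return the cheapest one."""
    n = len(dist)
    best = min(combinations(range(n), p),
               key=lambda fac: p_median_cost(dist, fac))
    return best, p_median_cost(dist, best)

# Hypothetical instance: five locations on a line.
coords = [0, 1, 2, 10, 11]
dist = [[abs(a - b) for b in coords] for a in coords]

best, cost = brute_force_p_median(dist, p=2)
print(best, cost)
```

The number of candidate facility sets grows combinatorially with the number of locations, which is why exact methods rely on decomposition and strong bounds rather than enumeration.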

Fair and Accurate Regression: Strong Formulations and Algorithms

Speaker 2: Anna Deza

IEOR PhD candidate at UC Berkeley, California, USA

Abstract:

We introduce mixed-integer optimization methods to solve regression problems with fairness metrics. To tackle this computationally hard problem, we study the polynomially-solvable single-factor and single-observation subproblems as building blocks and derive their closed convex hull descriptions. Strong formulations obtained for the general fair regression problem in this manner are utilized to solve the problem with a branch-and-bound algorithm exactly or as a relaxation to produce fair and accurate models rapidly. To handle large-scale instances, we develop a coordinate descent algorithm motivated by the convex-hull representation of the single-factor fair regression problem to improve a given solution efficiently. Numerical experiments show competitive statistical performance with state-of-the-art methods while significantly reducing training times.

A Model-Agnostic Framework for Collective and Sparse “Black-Box” Explanations

Speaker 3: Thomas Halskov

PhD Fellow, Copenhagen Business School, Denmark

Abstract:

The widespread adoption of “black-box” models in critical applications highlights the need for transparent and consistent explanations. While local explainer methods like LIME offer insights for individual predictions, they do not consider global constraints, such as continuity and sparsity. We develop a global explanation framework that enforces these constraints on top of local explanations. We illustrate on real-world datasets that we can generate stable explanations across multiple observations, while maintaining desirable global properties.

February 24, 2025, 16.30 – 17.30 (CET)

Data-Driven Algorithm Design and Verification for Parametric Convex Optimization

Speaker: Prof Bartolomeo Stellato

Assistant Professor

Department of Operations Research and Financial Engineering

Princeton University

USA

Abstract:

We present computational tools to analyze and design first-order methods in parametric convex optimization. First-order methods are popular for their low per-iteration cost and warm-starting capabilities. However, precisely quantifying the number of iterations needed to compute high-quality solutions remains a key challenge, especially in fast applications where real-time execution is crucial. In the first part of the talk, we introduce a numerical framework for verifying the worst-case performance of first-order methods in parametric quadratic optimization. We formulate the verification problem as a mixed-integer linear program where the objective is to maximize the infinity norm of the fixed-point residual after a given number of iterations. Our approach captures a wide range of gradient, projection, and proximal iterations through affine or piecewise affine constraints. We derive tight polyhedral convex hull formulations of the constraints representing the algorithm iterations. To improve the scalability, we develop a custom bound tightening technique combining interval propagation, operator theory, and optimization-based bound tightening. Numerical examples show that our method closely matches the true worst-case performance by providing several orders of magnitude reductions in the worst-case fixed-point residuals compared to standard convergence analyses. In the second part of the talk, we present a data-driven approach to analyze the performance of first-order methods using statistical learning theory. We build generalization guarantees for classical optimizers, using sample convergence bounds, and for learned optimizers, using the Probably Approximately Correct (PAC)-Bayes framework. We then use this framework to learn accelerated first-order methods by minimizing the PAC-Bayes bound directly over the key parameters of the algorithms (e.g., gradient steps and warm-starts). Numerical experiments show that our approach provides strong generalization guarantees for both classical and learned optimizers with statistical bounds that are very close to the true out-of-sample performance.

March 3, 2025, 16.30 – 17.30 (CET)

Pareto sensitivity, most-changing sub-fronts, and knee solutions

Speaker: Prof Luis Nunes Vicente

Timothy J. Wilmott Endowed Chair Professor and Department Chair

Department of Industrial and Systems Engineering

Lehigh University

USA

Abstract:

When dealing with a multi-objective optimization problem, obtaining a comprehensive representation of the Pareto front can be computationally expensive. Furthermore, identifying the most representative Pareto solutions can be difficult and sometimes ambiguous. A popular choice is the set of so-called Pareto knee solutions, where a small improvement in any objective leads to a large deterioration in at least one other objective. In this talk, using Pareto sensitivity, we show how to compute Pareto knee solutions according to their verbal definition of least maximal change. We refer to the resulting approach as the sensitivity knee (snee) approach, and we apply it to unconstrained and constrained problems. Pareto sensitivity can also be used to compute the most-changing Pareto sub-fronts around a Pareto solution, where the points are distributed along directions of maximum change, which could be of interest in a decision-making process if one is willing to explore solutions around a current one. Our approach is still restricted to scalarized methods, in particular to the weighted-sum or epsilon-constrained methods, and requires the computation or approximation of first- and second-order derivatives. We include numerical results from synthetic problems and multi-task learning instances that illustrate the benefits of our approach.

March 10, 2025, 16.30 – 17.30 (CET)

Concepts from cooperative game theory for feature selection

Speaker: Prof Marleen Balvert

Associate professor Operations Research & Machine Learning

Zero Hunger Lab, Tilburg University

The Netherlands

Abstract:

In classification problems, one is often interested in finding the features that determine the class of a sample. One approach is to first train a classification model – such as a random forest or a support vector machine – and then compute feature importance scores using e.g. SHAP or LIME. SHAP is a popular approach to compute feature importance scores, based on the concept of the Shapley value from cooperative game theory. Following the idea of using the Shapley value for feature importance scores, we look into the applicability of other metrics from cooperative game theory as feature importance scores.
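The Shapley value referred to above can be computed exactly for a handful of players by averaging marginal contributions over all coalitions. The sketch below does this for a made-up three-feature game; the characteristic function `value` is hypothetical and merely stands in for something like the accuracy of a model trained on a feature subset. It is not the SHAP library's algorithm, which relies on approximations to scale.

```python
from itertools import combinations
from math import factorial

def shapley_values(players, value):
    """Exact Shapley values for a cooperative game given by `value(coalition)`."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            # Weight of a coalition of size k that does not contain p.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi

def value(coalition):
    """Hypothetical game: 'a' and 'b' help individually and interact; 'c' is useless."""
    v = 0.0
    if "a" in coalition:
        v += 0.5
    if "b" in coalition:
        v += 0.2
    if "a" in coalition and "b" in coalition:
        v += 0.1
    return v

phi = shapley_values(["a", "b", "c"], value)
print(phi)
```

The interaction bonus is split equally between the two features that create it, the useless feature receives zero, and the scores sum to the value of the full feature set.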

March 17, 2025, 16.30 – 17.30 (CET)

Offline Reinforcement Learning for Combinatorial Optimization: A Data-Driven End-to-End Approach to Job Shop Scheduling

Speaker: Prof Yingqian Zhang

Associate Professor of AI for Decision Making

Department of Industrial Engineering and Innovation Sciences

Eindhoven University of Technology

The Netherlands

Abstract:

The application of (deep) reinforcement learning (RL) to solve combinatorial optimization problems (COPs) has become a very active research field in AI and OR over the past five years. Most existing approaches rely on online RL methods, where an agent learns by interacting with a surrogate environment, typically implemented as a simulator or simply a function. While online RL methods have demonstrated their effectiveness for many COPs, they suffer from two major limitations: sample inefficiency, requiring numerous interactions to learn effective policies, and inability to leverage existing data, including (near-optimal) solutions generated by well-established algorithms. In contrast, offline (or data-driven) RL learns directly from pre-existing data, offering a promising alternative. In this talk, I will present the first fully end-to-end offline RL method for solving (flexible) job shop scheduling problems (JSSPs). Our approach trains on data generated by applying various algorithms to JSSP instances. We show that, with only 100 training instances, our offline RL approach achieves performance comparable to or better than that of its online RL counterparts and outperforms the algorithms used to generate the training data.

March 24, 2025, 16.30 – 17.30 (CET)

Linear and nonlinear learning of optimization problems

Speaker: Prof Coralia Cartis

Professor of Numerical Optimization

Mathematical Institute

University of Oxford

United Kingdom

Abstract:

We discuss various ways of improving scalability and performance of optimisation algorithms when special structure is present: from linear embeddings to nonlinear ones, from deterministic to stochastic approaches. Both local and global optimization methods will be addressed.

March 31, 2025, 16.30 – 17.30 (CET)

Feature selection in linear Support Vector Machines via a hard cardinality constraint: a scalable conic decomposition approach

Speaker: Prof Laura Palagi

Department of Computer, Control and Management Engineering A. Ruberti

Sapienza University of Rome

Italy

Abstract:

In this talk, we present the embedded feature selection problem in linear Support Vector Machines (SVMs), in which an explicit cardinality constraint is employed. The aim is to obtain an interpretable classification model.

The problem is NP-hard due to the presence of the cardinality constraint, even though the original linear SVM amounts to a problem solvable in polynomial time. To handle the hard problem, we introduce two mixed-integer formulations for which novel semidefinite relaxations are proposed. Exploiting the sparsity pattern of the relaxations, we decompose the problems and obtain equivalent relaxations in a much smaller cone, making the conic approaches scalable. We propose heuristics using the information on the optimal solution of the relaxations. Moreover, an exact procedure is proposed by solving a sequence of mixed-integer decomposed semidefinite optimization problems. Numerical results on classical benchmarking datasets are reported, showing the efficiency and effectiveness of our approach.

Joint paper with Immanuel Bomze, Federico D’Onofrio, Bo Peng.

April 7, 2025, 16.30 – 17.30 (CET)

Deep generative models in stochastic optimization

Abstract:

Many important decisions are taken under uncertainty, since we do not know how various parameters will develop. In particular, the ongoing green transition requires large and urgent societal investments in new energy modes, infrastructure and technology. These decisions span a very long time horizon, and there is large uncertainty about energy prices, energy demand, and production from renewable sources.

Stochastic optimization methods can help us make better investment decisions. However, the results obtained are limited by the quality of the scenarios we use as input.

Recently, deep generative models have been developed that can generate a plenitude of realistic scenarios, but current solvers often cannot handle more than a handful of scenarios before the model becomes too complex to solve.

In the present talk we will show how to integrate deep generative models as part of the solution process in stochastic optimization, such that we both get faster solution times and more well-founded decisions.

Computational results will be presented for some 2-stage optimization problems, showing the benefits of the integrated approach. The studied problems include 2-stage facility location problems and 2-stage transportation problems.

The research is part of the ERC advanced grant project “DECIDE”.

April 28, 2025, 16.30 – 17.30 (CET)

IV YOUNG Session

Speaker 1: Marica Magagnini

PhD student, Università di Camerino, School of Science and Technology, Italy.

Abstract:

Machine Learning algorithms usually make decisions without providing explanations of how those decisions were made. As a result, such models lack user understanding and trust. Furthermore, the inherent variability and noise present in real-world scenarios frequently manifest as feature uncertainty in data, posing a common challenge in machine learning.

In sensitive contexts, such as those involving uncertain data, generating robust explanations is crucial. These explanations must account for the variability of uncertainty while still offering flexibility.

Counterfactuals are user-friendly explanations that provide valuable information to determine what should be changed in order to modify the outcome of a black box decision-making model without revealing the underlying algorithmic details. We propose an optimization problem to obtain counterfactual explanations when a k-Nearest Neighbors classifier is employed in a binary classification. The geometric framework of the problem supports the quality of the explanations.

Mixture of Gaussians as a Near-Optimal Mechanism in Approximate Differential Privacy

Speaker 2: Aras Selvi

PhD student, Analytics & Operations, Imperial College Business School, UK

Abstract:

We design a class of additive noise mechanisms that satisfy (ε, δ)-differential privacy (approximate DP) for scalar real-valued query functions whose sensitivities are known. Our mechanisms, named mixture mechanisms, are obtained by taking the mixture of an odd number of (quasi-)Gaussian distributions. Our mechanisms achieve significantly smaller expected noise amplitudes (l1-loss) and variances (l2-loss for zero-mean distributions) compared to best known mechanisms. We develop exact (for 3 peaks) and approximate (for more peaks) logarithmic-time algorithms to tune the mixture weights and variances of the Gaussian distributions used in our mechanism, to minimize an expected loss while satisfying a pre-specified level of privacy guarantee. We illustrate via numerical experiments that our approach attains near-optimal expected losses that are almost equal to theoretical lower bounds in various privacy regimes.

Co-authors: Huikang Liu, Aras Selvi, and Wolfram Wiesemann.

Speaker 3: Federico D’Onofrio

PhD Student, IASI-CNR, Istituto di Analisi dei Sistemi ed Informatica “A. Ruberti”, Italy

Abstract:

In this talk, we focus on embedded feature selection methods for nonlinear Support Vector Machines (SVMs) in classification tasks. Our framework is based on the dual formulation of SVMs with nonlinear kernels, which enables the modeling of complex relationships among variables. While many existing approaches rely on linear models and continuous relaxations, our work addresses the problem in its full combinatorial nature.

We aim for hard feature selection by imposing a cardinality constraint on the selected features, reflecting the realistic scenario in which the user knows in advance the number of relevant features to extract. Modeling this requirement leads to a challenging non-convex mixed-integer nonlinear programming problem. To solve it, we develop decomposition algorithms that exploit the combinatorial properties of the resulting subproblems.

We compare the proposed approach with analogous models based on linear SVMs, and conclude with a discussion of possible extensions and generalizations of the methodology.

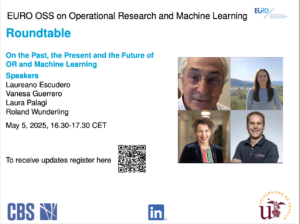

May 5, 2025, 16.30 – 17.30 (CET)

Gurobi Machine Learning

Abstract:

Gurobi-machinelearning is an open-source Python package to formulate trained regression models in a gurobipy model that can then be solved with the Gurobi solver. We will present this package with a focus on trained neural networks (NN). Representing such networks as constraints in a MIP leads to large models. Optimization-based bound tightening (OBBT) can be used to improve performance for solving such models. We will discuss how Gurobi has been extended to perform OBBT tailored to NN models and present performance results.

May 12, 2025, 16.30 – 17.30 (CET)

Learning Fair and Robust Support Vector Machine Models

Speaker: Prof Francesca Maggioni

Professor of Operations Research

Department of Management, Information and Production Engineering

University of Bergamo

Italy

Abstract:

In this talk, we present new optimization models for Support Vector Machine (SVM) and Twin Parametric Margin Support Vector Machine (TPMSVM), with the aim of separating data points in two or more classes. To address the problem of data perturbations and protect the model against uncertainty, we construct bounded-by-norm uncertainty sets around each training data point and apply robust and distributionally robust optimization techniques. We derive the robust counterpart extension of the deterministic approaches, providing computationally tractable reformulations. Closed-form expressions for the bounds of the uncertainty sets in the feature space have been formulated for typically used kernel functions.

To improve the fairness of traditional SVM approaches, we further propose a new optimization model which incorporates fairness constraints. These constraints aim to reduce bias in the model’s decision-making process, particularly with respect to sensitive attributes. The fairness-aware models are then evaluated against traditional SVM formulations and other fairness-enhanced approaches found in the literature.

Extensive numerical results on real-world datasets show the benefits of the robust and fair approaches over conventional SVM alternatives.

May 19, 2025, 16.30 – 17.30 (CET)

Sum of squares submodularity

Abstract:

Submodularity is a key property of set-valued (or pseudo-Boolean) functions, appearing in many different areas such as game theory, machine learning, and optimization. It is known that any set-valued function can be represented as a polynomial of degree less than or equal to n, and it is also known that testing whether a set-valued function of degree greater than or equal to 4 is submodular is NP-hard. In this talk, we consequently introduce a sufficient condition for submodularity based on sum of squares polynomials, which we refer to as sum of squares (sos) submodularity. We show that this condition can be efficiently checked via semidefinite programming and investigate the gap between submodularity and sos submodularity. We also propose applications of this new concept to three areas: (i) learning a submodular function from data, (ii) difference of submodular function optimization, and (iii) approximately submodular functions.
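To make the definition of submodularity concrete, the sketch below checks it by brute force using the standard pairwise condition f(S ∪ {i}) + f(S ∪ {j}) ≥ f(S ∪ {i, j}) + f(S) for all S and all distinct i, j outside S. This is an exponential-time check that is only usable on very small ground sets; it is not the sum of squares condition from the talk, and the function name `is_submodular` and the two test functions are illustrative choices.

```python
from itertools import combinations

def is_submodular(ground, f, tol=1e-12):
    """Brute-force submodularity test for a set function f on a small ground set."""
    ground = list(ground)
    for k in range(len(ground) + 1):
        for subset in combinations(ground, k):
            s = frozenset(subset)
            rest = [e for e in ground if e not in s]
            for i, j in combinations(rest, 2):
                # Diminishing returns, written in its pairwise form.
                if f(s | {i}) + f(s | {j}) < f(s | {i, j}) + f(s) - tol:
                    return False
    return True

# A concave function of the cardinality is submodular ...
print(is_submodular(range(4), lambda s: len(s) ** 0.5))
# ... while a strictly convex one is not.
print(is_submodular(range(4), lambda s: len(s) ** 2))
```

The check visits every subset of the ground set, so its cost doubles with each added element, which illustrates why a tractable sufficient condition is attractive.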

October 6, 2025, 16.30 – 17.45 (CET)

Contextual Stochastic Bilevel Optimization

Speaker: Prof Daniel Kuhn

Full Professor, Risk Analytics and Optimization Chair

College of Management of Technology

EPFL, Switzerland

Abstract:

We introduce contextual stochastic bilevel optimization (CSBO) – a stochastic bilevel optimization framework with the lower-level problem minimizing an expectation conditioned on contextual information and on the upper-level decision variable. We also assume that there may be multiple (or even infinitely many) followers at the lower level. CSBO encapsulates important applications such as meta-learning, personalized federated learning, end-to-end learning, and Wasserstein distributionally robust optimization with side information as special cases. Due to the contextual information at the lower level, existing single-loop methods for classical stochastic bilevel optimization are not applicable. We thus propose an efficient double-loop gradient method based on the Multilevel Monte-Carlo (MLMC) technique. When specialized to stochastic nonconvex optimization, the sample complexity of our method matches existing lower bounds. Our results also have important ramifications for three-stage stochastic optimization and challenge the long-standing belief that three-stage stochastic optimization is harder than classical two-stage stochastic optimization.

October 13, 2025, 16.30 – 17.45 (CET)

Towards Explainable (Integer) Optimization

Speaker: Prof Jannis Kurtz

Assistant Professor in Mathematical Methods and Applications in Data Science

Faculty of Economics and Business

Section Business Analytics

University of Amsterdam, The Netherlands

Abstract:

In recent years, there has been a rising demand for transparent and explainable AI models. Although significant progress has been made in providing explanations for machine learning (ML) models, this topic has not received the same attention in the Operations Research (OR) community. However, algorithmic decisions in OR are made by complex algorithms which, as we will argue in this talk, cannot be considered explainable or transparent. To tackle this issue, we present two promising concepts to provide explanations for (integer) optimization problems. In the first part, we introduce the concept of counterfactual explanations and show how it can be used to calculate explanations for linear integer optimization problems. We show that the resulting problem is Sigma_2^p-hard but can be solved in reasonable time for certain special cases. In the second part, we present a model-agnostic method called CLEMO which can provide explanations for any type of optimization algorithm (exact or heuristic). The idea is to approximate the input-output relationship of an optimization algorithm by an interpretable ML model.

October 20, 2025, 16.30 – 17.45 (CET)

V YOUNG Session

Mixed-integer Smoothing Surrogate Optimization with Constraints for MINLP

Speaker 1: Marina Cuesta

Postdoctoral researcher at the Department of Statistics, Universidad Carlos III de Madrid, Spain

Abstract:

Mixed-integer nonlinear programming (MINLP) problems play a central role in many real-world applications. However, they are often challenging to solve, as finding high-quality solutions is computationally demanding. To address this, the research community has developed many tailored algorithms that exploit the problem structure to improve tractability. The Mixed-integer Smoothing Surrogate Optimization with Constraints (MiSSOC) algorithm arises in this context and tackles complex MINLPs through an innovative integration of data science into mathematical optimization. MiSSOC approximates complex functions with constrained smooth additive regression models, which might integrate a feature selection stage. Then, the surrogate MINLP is built by replacing the original complex functions with their approximations, and its structure is then exploited. We demonstrate MiSSOC on benchmark problems and a real-world case study, highlighting its effectiveness as a data-driven and knowledge-driven framework for solving challenging MINLPs.

Estimating Maintenance Cost of Offshore Electrical Substations

Speaker 2: Solène Delannoy-Pavy

PhD Student, Ecole Nationale des Ponts et Chaussées, France

Abstract:

France aims to deploy 45 GW of offshore wind capacity by 2050. Both the ownership and maintenance of the offshore substations linking these farms to the grid are the responsibility of the French Transmission System Operator (TSO). In the event of an unscheduled substation shutdown, the TSO must pay significant penalties to producers. Failures occurring when weather conditions prevent access to the substation can quickly snowball into substantial losses. We present a decision support tool to estimate maintenance costs associated with strategic investment decisions, such as substation design selection. The problem is modeled as a Markov Decision Process, where each state reflects the asset’s degradation level and actions correspond to maintenance decisions. The objective is to optimize maintenance planning to minimize penalties, which are proportional to curtailed energy when capacity is limited. A multihorizon stochastic optimization model is introduced, featuring a bimonthly strategic horizon for advance planning and a daily operational horizon to capture penalty dynamics under uncertain weather conditions. A significant challenge arises from the exponential growth of the state space with the number of components, making it infeasible to solve the optimization problem exactly for real-life substation models. To address this, we propose an approximate solution approach that yields maintenance policies with strong performance.
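To make the Markov Decision Process framing concrete, here is a minimal sketch of value iteration on a toy degradation model. It is not the speaker's multihorizon model: the three states (good, worn, failed), the two actions (wait, maintain), and all transition probabilities and costs are hypothetical numbers chosen only for illustration.

```python
import numpy as np

def value_iteration(P, cost, gamma=0.95, tol=1e-9, max_iter=10_000):
    """Minimize expected discounted cost.

    P has shape (actions, states, states); cost has shape (actions, states).
    """
    n_states = P.shape[1]
    V = np.zeros(n_states)
    for _ in range(max_iter):
        Q = cost + gamma * (P @ V)          # shape (actions, states)
        V_new = Q.min(axis=0)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = cost + gamma * (P @ V)
    return V, Q.argmin(axis=0)

# Hypothetical toy data. States: 0 = good, 1 = worn, 2 = failed.
P = np.array([
    # action 0 = wait: the asset degrades stochastically
    [[0.8, 0.2, 0.0],
     [0.0, 0.7, 0.3],
     [0.0, 0.0, 1.0]],
    # action 1 = maintain: the asset returns to the good state
    [[1.0, 0.0, 0.0],
     [1.0, 0.0, 0.0],
     [1.0, 0.0, 0.0]],
])
cost = np.array([
    [0.0, 0.0, 10.0],   # waiting: penalty of 10 per period while failed
    [3.0, 3.0, 13.0],   # maintaining: repair cost 3, plus the penalty if failed
])

V, policy = value_iteration(P, cost)
```

With a single component the state space has three states; with many components the joint state space grows exponentially, which is why exact approaches of this kind stop being practical for realistic substation models.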

A stochastic line search method for minimizing expectation residuals

Speaker 3: Qi Wang

Postdoctoral researcher at the Department of Industrial and Operations Engineering, University of Michigan, USA

Abstract:

An Armijo-enabled stochastic line search based on standard stochastic zeroth- and first-order oracles is proposed for minimizing L-smooth expected-value functions. Most existing rate and complexity guarantees for stochastic gradient methods in L-smooth settings impose restrictive requirements on steplength sequences, typically mandating that they be non-adaptive, non-increasing, or bounded above by 1/L. Such conditions severely limit applicability and often degrade performance, as they require prior knowledge of the Lipschitz constant and preclude the use of larger steps. In contrast, our method employs a backtracking line search and yields a steplength sequence that need not be non-increasing. We establish convergence rates for the expected stationarity residual, along with iteration and sample complexity bounds. Finally, our algorithm and analysis extend to composite objectives that include an additional nonsmooth function, provided its proximal operator can be efficiently evaluated.
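As a rough illustration of the backtracking idea, the sketch below runs minibatch gradient descent with an Armijo sufficient-decrease test on a least-squares problem. It is not the algorithm from the talk: the function name `stochastic_armijo`, the choice to evaluate function and gradient on the same minibatch, and the parameters `grow` and `max_step` are assumptions made for this example. Each iteration first tries a larger step than the previous one, so the steplength sequence need not be non-increasing and no Lipschitz constant is required.

```python
import numpy as np

def stochastic_armijo(A, b, x0, iters=200, batch=32, step0=1.0,
                      c=1e-4, shrink=0.5, grow=2.0, max_step=10.0, seed=0):
    """Minibatch gradient descent with Armijo backtracking on least squares."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    step = step0
    steps = []
    for _ in range(iters):
        # Sample a minibatch; it defines both the zeroth- and first-order oracle.
        idx = rng.choice(len(b), size=batch, replace=False)
        A_s, b_s = A[idx], b[idx]

        def f(z):
            r = A_s @ z - b_s
            return 0.5 * np.mean(r ** 2)

        g = A_s.T @ (A_s @ x - b_s) / batch
        fx = f(x)
        # Try a larger step first, then backtrack until sufficient decrease holds.
        step = min(step * grow, max_step)
        while f(x - step * g) > fx - c * step * (g @ g) and step > 1e-12:
            step *= shrink
        x = x - step * g
        steps.append(step)
    return x, steps
```

Calling `stochastic_armijo(A, b, np.zeros(A.shape[1]))` returns the final iterate together with the accepted steplengths, which can be inspected to see how they vary across iterations.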

November 3, 2025, 16.30 – 17.30 (CET)

To Scan or Not to Scan? Machine Learning Informed POMDPs for Intensive Stroke Care

Speaker: Prof Agni Orfanoudaki

Associate Professor of Operations Management, Saïd Business School

Management Fellow, Exeter College

University of Oxford, UK

Abstract:

Malignant cerebral edema is a life-threatening complication of large middle cerebral artery ischemic stroke which must be monitored closely through cranial CT scanning to inform clinical decision making for patients being treated in neurointensive care units. The onset and trajectory of edema are highly unpredictable and only partially observable until a scan is performed, requiring physicians to interpret many sources of information to decide when to conduct additional CT scans. Further, high operational costs, patient radiation exposure, and scanner scarcity make it detrimental to scan too frequently. We propose a novel approach to optimal transition screening by introducing one-state memory to a partially observable Markov decision process (POMDP) through a lifted state space which uses machine learning edema trajectory predictions to condense the high-dimensional observation space. In addition to theoretically proving and numerically demonstrating that our approach results in an optimal threshold-based screening policy, we validate our prescriptive model through a simulation study of real-world patient data. Our results suggest that the proposed screening policy enables more effective and efficient CT scanning than current clinical practice or alternative prediction-driven strategies.

November 10, 2025, 16.30 – 17.30 (CET)

On the solution of the 0/1 D-optimality problem and the Maximum-Entropy sampling problem

Speaker: Prof Marcia Fampa

Department of Systems Engineering and Computer Science, COPPE

Federal University of Rio de Janeiro – UFRJ, Brazil

Abstract:

The 0/1 D-optimality problem and the Maximum-Entropy Sampling problem are two well-known NP-hard discrete maximization problems in experimental design. Algorithms for exact optimization (of moderate-sized instances) are based on branch-and-bound. We discuss key ingredients for the branch-and-bound: upper bounds, lower bounds, and a variable fixing strategy. The best upper-bounding methods are based on convex relaxations, and we present ADMM (Alternating Direction Method of Multipliers) algorithms for solving these relaxations. This is joint work with Jon Lee, Gabriel Ponte and Luze Xu.
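For readers unfamiliar with the objective, the sketch below evaluates the usual D-optimality criterion by brute force: choose s of n candidate design points so that the log-determinant of the resulting information matrix is as large as possible. This assumes the standard formulation, which the abstract does not spell out. It is plain enumeration, not the branch-and-bound or ADMM methods from the talk, and the helper name `d_optimal_subset` is hypothetical.

```python
from itertools import combinations

import numpy as np

def d_optimal_subset(V, s):
    """Enumerate all size-s subsets of the rows of V and maximize log det."""
    V = np.asarray(V, dtype=float)
    best_value, best_subset = -np.inf, None
    for subset in combinations(range(len(V)), s):
        rows = V[list(subset)]
        info = rows.T @ rows                  # information matrix of the subset
        sign, logdet = np.linalg.slogdet(info)
        value = logdet if sign > 0 else -np.inf   # singular designs are worthless
        if value > best_value:
            best_value, best_subset = value, subset
    return best_subset, best_value
```

Enumeration visits every subset of size s, so it is only usable for very small instances; this is the cost that bounding and variable fixing are meant to avoid.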

November 17, 2025, 16.30 – 17.30 (CET)

Placing n points as uniformly as possible

Abstract:

Discrepancy measures are designed to quantify how well-distributed a given set of points is. Low-discrepancy constructions such as Sobol’ sequences have advantages over random sampling, and are therefore extensively used in Quasi-Monte Carlo integration as well as in design-of-experiments settings. But they are far from being optimal, as we will discuss in this presentation. More precisely, we will survey recent advances for the long-standing problem of placing n points as uniformly as possible. We will show constructive approaches to design point sets that are of much smaller discrepancy than traditionally used constructions.

The presentation is to a large extent based on the papers https://arxiv.org/abs/2311.17463 (to appear in the Proc. of the AMS) and https://www.pnas.org/doi/10.1073/pnas.2424464122 (PNAS 2025), both joint work with François Clément, Kathrin Klamroth, and Luís Paquete.
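To give a feel for what a discrepancy measure computes, the sketch below evaluates the L2 star discrepancy of a point set in the unit cube using Warnock's closed-form formula. Two assumptions are worth flagging: the talk may well concern a different measure (for instance the L-infinity star discrepancy, which has no such simple formula), and the example uses a Halton point set as a stand-in low-discrepancy construction rather than the Sobol’ sequences named in the abstract.

```python
import numpy as np

def l2_star_discrepancy(points):
    """L2 star discrepancy of points in [0, 1]^d via Warnock's formula."""
    x = np.asarray(points, dtype=float)
    n, d = x.shape
    term1 = 3.0 ** (-d)
    term2 = (2.0 ** (1 - d) / n) * np.prod(1.0 - x ** 2, axis=1).sum()
    pairwise_max = np.maximum(x[:, None, :], x[None, :, :])
    term3 = np.prod(1.0 - pairwise_max, axis=2).sum() / n ** 2
    # Guard against tiny negative values caused by rounding.
    return float(np.sqrt(max(term1 - term2 + term3, 0.0)))

def radical_inverse(i, base):
    """Reflect the base-`base` digits of i about the radix point."""
    result, f = 0.0, 1.0 / base
    while i > 0:
        result += f * (i % base)
        i //= base
        f /= base
    return result

def halton_2d(n):
    """First n points of the two-dimensional Halton sequence (bases 2 and 3)."""
    return np.array([[radical_inverse(i, 2), radical_inverse(i, 3)]
                     for i in range(1, n + 1)])
```

Comparing `l2_star_discrepancy(halton_2d(64))` with the value for 64 points placed on the diagonal of the unit square shows how strongly the measure penalizes a poorly spread point set.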

November 24, 2025, 16.30 – 17.30 (CET)

Noisy and Stochastic Algorithms for Constrained Continuous Optimization

Speaker: Prof Frank E. Curtis

Full Professor at the Department of Industrial and Systems Engineering

Lehigh University, USA

Abstract:

I will present recent work by my research group on the design and analysis of noisy and stochastic algorithms for solving constrained continuous optimization problems. I will focus on two types of algorithms. The first is designed to solve problems in which the constraints are defined by an expectation or a large finite sum. The algorithm exploits a progressive sampling approach in order to obtain improved worst-case sample complexity guarantees compared to an algorithm that solves the full-sample problem directly. The second leverages state-of-the-art guarantees from the deterministic optimization literature in order to obtain optimal active-set identification guarantees despite noise in the objective and constraint function and derivative values. I will present the results of numerical experiments that provide evidence for the applicability of the theoretical guarantees in practice.

December 1, 2025, 16.30 – 17.30 (CET)

Optimization for a Better World

Speaker: Prof Dick den Hertog

Faculty of Economics and Business

Section Business Analytics

University of Amsterdam, The Netherlands

Abstract:

This talk will showcase the potential of optimization in enabling non-governmental organizations (NGOs) to make a greater impact on the UN’s Sustainable Development Goals (SDGs). Several real-world applications with high impact will be discussed:

- The optimization of the food supply chain for the World Food Programme (WFP). By leveraging advanced optimization models, WFP is now able to deliver critical food aid to millions more people.

- An innovative optimization model for The Ocean Cleanup that strategically steers their vessels to accelerate the removal of plastic from the world’s oceans, contributing to the fight against marine pollution.

- Innovative projects to optimize geospatial accessibility to healthcare services, such as optimizing primary healthcare facility locations in Timor-Leste, determining stroke center placements in Vietnam, planning COVID-19 test centers in Nepal, and identifying ideal water well sites in Sudan. These efforts are driven by collaborations with leading organizations such as the World Bank, World Health Organization, Amref, and the American Red Cross.

In addition to presenting these impactful projects, this talk will touch on the novel research challenges they have sparked, some of which remain open and ripe for exploration.

January 27, 2026, 16.30 – 17.45 (CET)

VI YOUNG Session

Optimizing treatment allocation in the presence of network effects

Speaker 1: Daan Caljon

PhD Researcher, KU Leuven, Belgium

Abstract:

In Influence Maximization, the objective is, given a budget, to select the optimal set of entities in a network to target with a treatment so as to maximize the total effect. For instance, in marketing, the objective is to target the set of customers that maximizes the total response rate, resulting from both direct treatment effects on targeted customers and indirect spillover effects that follow from targeting these customers. Recently, new causal methods to estimate treatment effects in the presence of network interference have been proposed. However, the issue of how to leverage these models to make better treatment allocation decisions has been largely overlooked. Traditionally, in Uplift Modeling with no interference, entities are ranked according to estimated treatment effect, and the top k are allocated treatment. Since entities in a network influence each other, this ranking approach will be suboptimal. To address this gap, we propose OTAPI (Optimizing Treatment Allocation in the Presence of Interference), which finds data-driven solutions to the Influence Maximization problem using treatment effect estimates. Our results show that our method outperforms existing treatment allocation approaches on both synthetic and semi-synthetic datasets.
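The gap between top-k ranking and network-aware allocation can be seen on a toy example. The sketch below is not OTAPI: it assumes a deliberately simple, hypothetical effect model in which each treated entity contributes its direct effect `tau[i]` and each untreated entity with at least one treated neighbor contributes a spillover `spill[j]`, and it compares ranking by direct effect with a greedy choice based on marginal total effect.

```python
def total_effect(treated, tau, spill, neighbors):
    """Direct effects on treated nodes plus spillover on their untreated neighbors."""
    treated = set(treated)
    direct = sum(tau[i] for i in treated)
    indirect = sum(
        spill[j]
        for j in range(len(tau))
        if j not in treated and any(n in treated for n in neighbors[j])
    )
    return direct + indirect

def top_k(tau, k):
    """Classical uplift ranking: ignore the network, take the k largest effects."""
    return sorted(range(len(tau)), key=lambda i: -tau[i])[:k]

def greedy(tau, spill, neighbors, k):
    """Add, one at a time, the node with the largest resulting total effect."""
    chosen = []
    for _ in range(k):
        candidates = [i for i in range(len(tau)) if i not in chosen]
        best = max(
            candidates,
            key=lambda i: total_effect(chosen + [i], tau, spill, neighbors),
        )
        chosen.append(best)
    return chosen

# Hypothetical star network: node 0 is the hub, nodes 1 to 4 are leaves.
tau = [0.5, 0.6, 0.6, 0.6, 0.6]
spill = [0.4] * 5
neighbors = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
```

With a budget of one treatment, ranking by direct effect selects a leaf, whereas the greedy rule selects the hub because its spillover reaches all four leaves.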

Relative Explanations for Contextual Problems with Endogenous Uncertainty: An Application to Competitive Facility Location

Speaker 2: Jasone Ramírez-Ayerbe

CIRRELT and Department of Computer Science and Operations Research, Université de Montréal, Montréal, Canada

Abstract:

In this talk, we consider contextual stochastic optimization problems subject to endogenous uncertainty, where the decisions affect the underlying distributions. To implement such decisions in practice, it is crucial to ensure that their outcomes are interpretable and trustworthy. To this end, we compute relative counterfactual explanations, providing practitioners with concrete changes in the contextual covariates required for a solution to satisfy specific constraints. Whereas relative explanations have been introduced in prior literature, to the best of our knowledge, this is the first work focused on problems with binary decision variables and subject to endogenous uncertainty. We propose a methodology that uses the Wasserstein distance as a regularization term, which leads to a reduction in computation times compared to its unregularized counterpart. We illustrate the method using a choice-based competitive facility location problem, and present numerical experiments that demonstrate its ability to efficiently compute sparse and interpretable explanations.

On a Computationally Ill-Behaved Bilevel Problem with a Continuous and Nonconvex Lower Level

Speaker 3: Yasmine Beck

Eindhoven University of Technology (TU/e), Department of Industrial Engineering and Innovation Sciences (Operations Planning, Accounting and Control Group), the Netherlands

Abstract: